In 1961, Frank Drake introduced the following equation for the number N of civilizations in our galaxy with which radio communication might be possible:

N = R x Fp x Ne x Fl x Fi x Fc x L

As described by the SETI Institute,

- R is the average rate of star formation in the galaxy,

- Fp is the fraction of those stars that have planets,

- Ne is the average number of planets that can potentially support life per star that has planets,

- Fl is the fraction of planets that could support life that actually develop life at some point,

- Fi is the fraction of planets with life that actually go on to develop intelligent life (civilizations),

- Fc is the fraction of civilizations that develop a technology that releases detectable signs of their existence into space, and

- L is the length of time for which such civilizations release detectable signals into space.
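To make the structure of the equation concrete, here is a minimal Python sketch that treats N as a plain product of the seven factors. The function name `drake_n` and the argument names are my own, the units (years) are an assumption, and the numbers in the usage lines are arbitrary placeholders chosen only to exercise the function, not estimates of anything.

```python
def drake_n(R, fp, ne, fl, fi, fc, L):
    """Return N = R x Fp x Ne x Fl x Fi x Fc x L.

    R is a rate (assumed here to be stars per year), ne a count per star,
    and L a duration (assumed years); fp, fl, fi and fc are fractions and
    must lie in [0, 1].
    """
    for name, value in (("fp", fp), ("fl", fl), ("fi", fi), ("fc", fc)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a fraction in [0, 1], got {value}")
    return R * fp * ne * fl * fi * fc * L


# Placeholder inputs (NOT real estimates), picked so the arithmetic is easy to check.
example = drake_n(R=1.0, fp=0.5, ne=2.0, fl=0.5, fi=0.5, fc=0.5, L=100.0)
print(example)  # 12.5

# A single zero factor forces the whole product to zero.
print(drake_n(R=1.0, fp=0.5, ne=2.0, fl=0.0, fi=0.5, fc=0.5, L=100.0))  # 0.0
```

Because the result is a bare product, it is only as trustworthy as its least certain input.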

Drake's purpose in writing this equation was to facilitate discussion at a meeting. Its importance lies not in the numerical prediction of communicative civilizations in the galaxy (note that there are 7 factors in the equation, and errors in each term will combine to make any calculation wildly uncertain) but rather in the framing of issues related to the search for alien life. That said, the equation tells a story. Assuming that these are the relevant factors, if any of the terms is zero, then N is zero and we are likely to be alone. If none of them is zero, then even if they are exceedingly small, there is a chance that there is life somewhere in the galaxy. Moreover, it's unlikely that any of these terms is zero, given the huge size of the galaxy. In epidemiological terms, then, the equation helps to frame our thinking about the potential prevalence of life in the Milky Way galaxy.
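The remark about compounding errors can be made a little more concrete with a rough worked calculation. It assumes, purely for illustration, that every factor is positive and that each is known only to within a multiplicative factor k (k and N_true are my notation, not part of Drake's equation). Taking logarithms turns the product into a sum, so the uncertainties add in log space:

```latex
\begin{aligned}
\log N &= \log R + \log F_p + \log N_e + \log F_l + \log F_i + \log F_c + \log L, \\
|\Delta \log N| &\le 7 \log k
\quad\Longrightarrow\quad
\frac{N}{k^{7}} \le N_{\text{true}} \le k^{7} N .
\end{aligned}
```

With k = 10, that is, each factor known to within an order of magnitude, the worst case spans a factor of 10^7 in either direction.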

NASA opined recently that we're likely to have strong indications of life beyond Earth within a decade, which made me wonder about Drake-like equations in medicine and epidemiology. As a toy example, suppose that we write the number of patients contracting hospital-acquired infections (HAIs) yearly in the US as the product of several factors, say

N = Nhospital visits x Pcontact x Pdevelop disease x Pdisease reported

where

- Nhospital visits is the number of patients visiting hospitals annually,

- Pcontact is the probability that a patient comes into contact with infectious material (e.g., via environmental contamination or an infectious patient or healthcare worker),

- Pdevelop disease is the probability of developing disease if infected, and

- Pdisease reported is the probability that an infection is recognized and reported.

According to the CDC, there are 35.1M hospital discharges annually in the US, so Nhospital visits ~ 35M. Now suppose that Pcontact and Pdevelop disease are both low, say 1%, and that we have excellent surveillance so that Pdisease reported ~ 1. If that were true, we would expect to see 3,500 HAIs per year. We should be so lucky. Being more realistic, however, might yield a 10% chance of coming into contact with infectious material, Pcontact ~ 0.1, and a higher probability of developing disease if infected, say 50%, so that Pdevelop disease ~ 0.5. In that case we get N = 1.75M HAIs annually, which is close to the CDC estimate of 1.7M.
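The two scenarios above are easy to reproduce. The sketch below is only a calculator for the toy equation; the function name `expected_hais` and its parameter names are my own, and the inputs are the rough guesses from the text, not measured values.

```python
def expected_hais(n_visits, p_contact, p_develop, p_reported):
    """Toy estimate: N = Nhospital visits x Pcontact x Pdevelop disease x Pdisease reported."""
    return n_visits * p_contact * p_develop * p_reported


N_VISITS = 35_000_000  # ~35M, rounded from the 35.1M discharges cited in the text

# Optimistic guess: 1% contact, 1% develop disease, near-perfect reporting.
optimistic = expected_hais(N_VISITS, 0.01, 0.01, 1.0)

# More realistic guess: 10% contact, 50% develop disease, near-perfect reporting.
realistic = expected_hais(N_VISITS, 0.1, 0.5, 1.0)

print(f"optimistic: {optimistic:,.0f}")  # optimistic: 3,500
print(f"realistic:  {realistic:,.0f}")   # realistic:  1,750,000
```

The two guesses differ by a factor of 500, which shows how sensitive the answer is to the assumed probabilities.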

How could this be decreased? The number of hospital visits, Nhospital visits, is unlikely to decrease drastically, so that's not really a control variable. Perhaps we could develop interventions to decrease Pcontact and Pdevelop disease. Obviously there is already tremendous focus on reducing Pcontact through handwashing, alcohol-based hand rubs, contact precautions, better environmental cleaning, etc. If Pcontact could be reduced by a factor of 10, from 0.1 to 0.01 (seemingly a tall order), N could be dropped to 175K. That may not be possible, but suppose we could achieve a factor of 2 improvement so that Pcontact ~ 0.05. If we could combine that with a similar decrease in Pdevelop disease, from 0.5 to 0.25, by, say, better use of antimicrobials, then N could in turn fall from 1.75M to about 438K. Thus, combination strategies could have great impact.
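The intervention scenarios in this paragraph can be compared side by side. As before, this is a sketch under the post's own arbitrary assumptions; the `Scenario` class and its field names are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    n_visits: float = 35_000_000  # ~35M hospital visits per year (from the text)
    p_contact: float = 0.1        # guessed probability of contact
    p_develop: float = 0.5        # guessed probability of disease if infected
    p_reported: float = 1.0       # assumes near-perfect surveillance

    def hais(self) -> float:
        """Toy estimate of yearly HAIs as the product of the four factors."""
        return self.n_visits * self.p_contact * self.p_develop * self.p_reported


baseline = Scenario()
scenarios = {
    "baseline": baseline,
    "contact reduced 10x": replace(baseline, p_contact=0.01),
    "contact and disease each halved": replace(baseline, p_contact=0.05, p_develop=0.25),
}

for label, s in scenarios.items():
    # Show each estimate and its size relative to the baseline.
    print(f"{label}: {s.hais():,.0f} ({s.hais() / baseline.hais():.0%} of baseline)")
```

Because the model is a pure product, halving two factors cuts the estimate to a quarter of the baseline, while a tenfold cut in one factor cuts it to a tenth.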

This is simply a back-of-the-envelope calculation: the equation above is but an approximation and the estimates are completely arbitrary. Moreover, parameters will vary from facility to facility and even between patient populations (imagine how Pdevelop disease is likely to vary between transplant and general surgery patients). That said, this toy model illustrates a simple point: breaking a problem down into smaller pieces can be helpful in thinking about it.

While this way of thinking is not alien (pun intended) to biostatistics and epidemiology, and clearly has limitations, I think it's helpful for framing issues in one's mind. In addition to clearly laying out the assumptions behind whatever is being contemplated (in this case, HAIs), toy model approaches can suggest what may be needed in order to get a better answer.

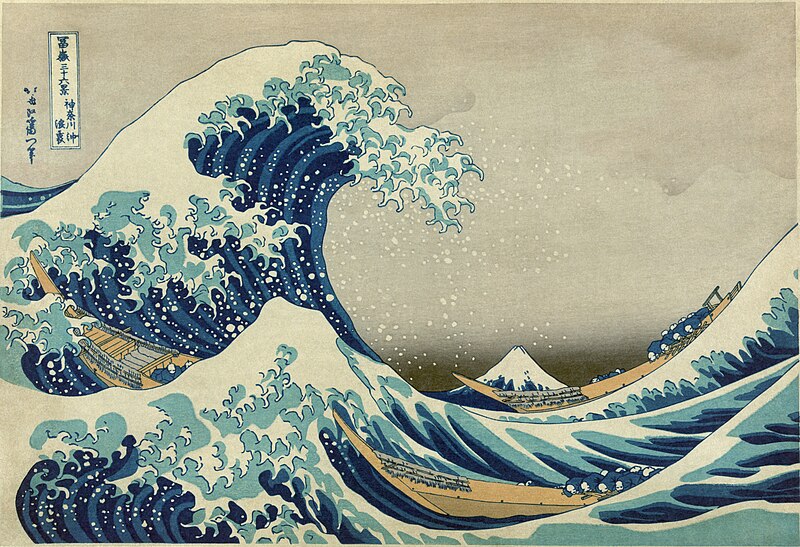

(image source: Wikipedia)
